The really hard part of APC is its internal locking code - it's not that hard to write, just hard to figure out whether you've done it wrong. And I'm just about to really mess around with the assembly spin locks and pthread mutex locks to turn them into cross-process locks that live in shared memory (remember that "volatile" keyword in C?). The other couple of lock modes are already cross-process, but slow (because of the syscall). If these work right, I won't have to cripple the fast part of the code to implement the features I have in mind.
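The trick with turning a spin lock into a cross-process lock is that the lock word itself must live in the shared segment, and every access to it must be a real atomic operation. `volatile` alone only stops the compiler from caching the value in a register; it gives no atomicity or memory ordering. A minimal sketch, assuming C11 `<stdatomic.h>` instead of APC's hand-written assembly: an `atomic_flag` (guaranteed lock-free, so it works between processes sharing the page) sits in an anonymous `MAP_SHARED` mapping inherited across `fork()`. The names `shm_spinlock_t` and `hammer_spinlock` are hypothetical, not APC's.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <sched.h>
#include <stdatomic.h>
#include <sys/mman.h>
#include <sys/wait.h>
#include <unistd.h>

/* A spinlock that can live in shared memory (hypothetical type). */
typedef struct {
    atomic_flag flag;
} shm_spinlock_t;

static void spin_lock(shm_spinlock_t *l)
{
    /* test_and_set returns the previous value: keep trying until it was clear. */
    while (atomic_flag_test_and_set_explicit(&l->flag, memory_order_acquire))
        sched_yield();   /* let the holder run instead of burning the CPU */
}

static void spin_unlock(shm_spinlock_t *l)
{
    atomic_flag_clear_explicit(&l->flag, memory_order_release);
}

typedef struct {
    shm_spinlock_t lock;
    long counter;        /* protected by lock, deliberately not atomic */
} shared_area_t;

/* Fork nprocs children that each do iters locked increments.
   Returns the final counter, or -1 on setup failure. */
static long hammer_spinlock(int nprocs, int iters)
{
    shared_area_t *shm = mmap(NULL, sizeof *shm, PROT_READ | PROT_WRITE,
                              MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (shm == MAP_FAILED)
        return -1;
    atomic_flag_clear(&shm->lock.flag);
    shm->counter = 0;

    for (int p = 0; p < nprocs; p++) {
        pid_t pid = fork();
        if (pid < 0)
            return -1;
        if (pid == 0) {                      /* child */
            for (int i = 0; i < iters; i++) {
                spin_lock(&shm->lock);
                shm->counter++;
                spin_unlock(&shm->lock);
            }
            _exit(0);
        }
    }
    while (wait(NULL) > 0)                   /* reap all children */
        ;
    long result = shm->counter;
    munmap(shm, sizeof *shm);
    return result;
}
```

Four children doing 10,000 locked increments each should leave the counter at exactly 40,000; a broken lock shows up as a lower number.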

But before I go MIA in the locking code, I'd like to get my testing in place. So I've written a tiny test app called lockhammer - read the makefile and please run it on every platform where you want APC to work (make APC_DIR=~/apc link; make).

The code in lockhammer.c should be easy to understand - basically it allocates some shared memory, creates a lock in it, forks, and re-attaches the memory in each process. Every process then loops: lock, write its PID into shm, sleep, check that the PID is still its own. If anyone has a better idea of how to test locks, I'd also like modifications to it, in case you think there's some corner case I missed (yes, random sleep & random fork-order is also on my list of TODOs).
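A minimal sketch of the lockhammer loop described above, under two assumptions: the lock is a pthread mutex marked `PTHREAD_PROCESS_SHARED` (the attribute that makes a pthread mutex usable across processes), and the shared memory is an anonymous mapping inherited across `fork()` rather than a segment re-attached in each child as lockhammer.c does. The function and struct names are hypothetical. On older glibc, link with `-pthread`.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <pthread.h>
#include <sys/mman.h>
#include <sys/wait.h>
#include <unistd.h>

typedef struct {
    pthread_mutex_t lock;
    pid_t owner;        /* PID written by whoever holds the lock */
    int collisions;     /* times a process found someone else's PID */
} hammer_shm_t;

/* Returns the number of collisions seen, or -1 on setup failure. */
static int lockhammer(int nprocs, int rounds, useconds_t hold_us)
{
    hammer_shm_t *shm = mmap(NULL, sizeof *shm, PROT_READ | PROT_WRITE,
                             MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (shm == MAP_FAILED)
        return -1;

    pthread_mutexattr_t attr;
    pthread_mutexattr_init(&attr);
    /* The crucial bit: allow other processes to use this mutex. */
    if (pthread_mutexattr_setpshared(&attr, PTHREAD_PROCESS_SHARED) != 0)
        return -1;
    pthread_mutex_init(&shm->lock, &attr);
    pthread_mutexattr_destroy(&attr);
    shm->owner = 0;
    shm->collisions = 0;

    for (int p = 0; p < nprocs; p++) {
        pid_t pid = fork();
        if (pid < 0)
            return -1;
        if (pid == 0) {
            pid_t me = getpid();
            for (int r = 0; r < rounds; r++) {
                pthread_mutex_lock(&shm->lock);
                shm->owner = me;             /* write PID into shm */
                usleep(hold_us);             /* sleep while holding the lock */
                if (shm->owner != me)        /* check the PID is still ours */
                    shm->collisions++;
                pthread_mutex_unlock(&shm->lock);
            }
            _exit(0);
        }
    }
    while (wait(NULL) > 0)
        ;
    int result = shm->collisions;
    pthread_mutex_destroy(&shm->lock);
    munmap(shm, sizeof *shm);
    return result;
}
```

A correct cross-process lock should report zero collisions for any mix of process count, rounds and hold time; anything else means two processes were inside the critical section at once.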

Fundamentally, the information about locks is held privately within the lock type code in APC. It needs to be moved into a shared (or at least transparent) form so that multiple unrelated processes can share the cache without collisions. Eventually, you should be able to use APC in a standard FastCGI deployment without allocating a cache per process.

And if you're a user, I'd like to read something other than a bug report, occasionally.

--They're gonna lock me up and throw away the key!

Finally, after nearly a year of work, it's into a release. Some new stuff has sneaked in undocumented that people might find interesting - apc.preload_path is one of them. The backend memory allocation has been redone - the API part by me and the internals by shire. There's a hell of a lot of new code in there, both rewritten and added. Tons of php4 cruft removed, php5 stuff optimized, made more stable, then less stable, made faster, then applied brakes. Made leak-proof, quake-proof and in general, idiot-proof. And so on and so forth.

```
apc/ $ cvs diff -u -N -r HEAD -r RELEASE_3_0_19 | diffstat /dev/stdin
 68 files changed, 3255 insertions(+), 5545 deletions(-)
```

Sorry about the b0rked 3.1.1 release; please test this one! :)

--Each new user of a new system uncovers a new class of bugs.

-- Kernighan

That annoying file descriptor leak that snuck into 3.0.17 has finally been laid to rest. A few double-free issues were fixed too, after I spent quite a long time staring at the same code till enlightenment hit me like a clue bat. Along with that, there are a bunch of quick fixes for 5.3 quirks. I'm not happy with those, but this is the 3_0 stable branch, and by the time 5.3 is popular enough, HEAD should be taking care of those problems. The build is broken in VC++ in this release, but excluding apc_pool.c from the build should work.

Expect more changes... as soon as I get back home.

--Delay always breeds danger and to protract a great design is often to ruin it.

-- Miguel De Cervantes

In response to CVE-2008-1488, APC 3.0.17 has just been pushed out with the requisite security fixes. But in the process of producing a php4 compatible release, a significant amount of code has been reverted in the merge into an APC_3_0 branch for future bugfixes.

I've spent a couple of hours unmerging my "bye bye php4" cleanups with the help of Kompare. And my sanity is simply due to the fact that I can "cvs diff -u | kompare -" to look at the resulting huge patch. But it is not unpossible that the new code merged from HEAD has regressions, so you could also apply the unofficial patch onto 3.0.16.

--I took your advice and did my own thing. Now I've got to undo it.

I got a nice little present for Christmas.

It had +4 lines, a huge mail explaining why and made me feel happy & stupid at the same time (there's some correlation, I think).

The patch fixes an off-by-one error in the APC shm allocator (read my mail for a shorter paraphrase) and has triggered the 3.0.16 release. Now APC should be stable even under cache-full/heavy-load conditions. And I'd been barking up the wrong tree of race conditions for months & months. But the important thing is that this is fixed now.

Merry XMas and a happy New Year! [ citation needed ]

--Patience is a minor form of despair, disguised as virtue.

-- Ambrose Bierce

APC 3.0.15 has been released - read the release announcement. Not too many changes since 3.0.14, but there's a reason it took this long to make so few changes.

To begin with, I've just been lazy. Just kidding! This release was actually delayed to make sure this could be the very last PHP4 release of APC. And with the amount of major changes coming in, the next release is definitely going to be 3.1.0 rather than a 3.0.16 release. Now I can start working on making some fairly big changes.

Bye Bye PHP4, it was a nice ride while it lasted.

--While most peoples' opinions change, the conviction of their correctness never does.

PHP programmers don't really understand PHP.

They know how to use PHP - but they hardly know how it works, mainly because it Just Works most of the time. But such wilful ignorance (otherwise known as abstraction) often runs them aground when their code meets the stupidity that is APC. Bear with me while I explain something very simple about PHP: how includes work.

Every single include that you do in PHP is evaluated at runtime. This is necessary so that you can technically write an include inside an if condition or a while loop and have it behave as you would expect. But executing PHP in Zend is actually a two-step process - compile *and* execute - of which APC gets to handle only the first.

Compilation: Compiling a php file gives a single opcode stream, a list of functions & yet another list of classes. The includes in that file are only processed when the compiled code is actually executed. To simplify things a bit, take a look at how the following code would be executed.

```php
<?php return; include_once "a.php"; ?>
```

The PHP compiler does generate an instruction to include file "a.php", but since the engine never executes it, no error is thrown for the absence of a.php. With that in mind, classes & OOP face a unique problem during compilation.

```php
<?php
include_once "parent.php";

// "Parent" is a reserved name in PHP, so the base class is called ParentClass
class Child extends ParentClass
{
}
?>
```

Even though the class Child is created at compile time, its parent class is not available in the class table until the include instruction is actually executed and parent.php is compiled. So php generates a runtime class declaration, which is an actual pair of opcodes:

```
ZEND_FETCH_CLASS              :1, 'ParentClass'
ZEND_DECLARE_INHERITED_CLASS  null, '<mangled>', 'child'
```

But what if the parent class was already in the class table when the file was being compiled? Take the following index.php:

```php
<?php
include_once "parent.php";
include_once "child.php";
$a = new Child();
```

Since the parent class is obviously already compiled & ready, Zend does something intelligent: it removes the two instructions and replaces them with NOPs. That makes for less work at runtime and therefore faster execution.

Here's the kicker. Which of these versions should APC cache? The dynamically inherited version is valid for both cases - but APC caches whatever it encounters first. And the static version is incompatible with the dynamic scenario.

So whenever APC detects that it has cached a static version, but this case actually requires a dynamic version, it decides to not cache that file *at* all from that point onwards. That's what the APC autofiltering does.

Now, you ask - how could it appear in perfectly normal code?

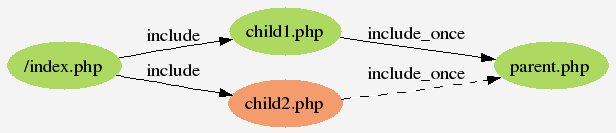

Assume child1 and child2 inherit from the parent class. Here is what the first hit on index.php looks like from an inclusion perspective. In this case, child2 is compiled with the faster static inheritance (marked in orange), while child1 suffers the performance hit of not having Parent available till execution time.

Then we have a profile.php which only requires the child2 class. But while executing this file, APC fetches the copy of child2.php which was in cache - which is the statically inherited one.

As you could've guessed, the cached version is not usable for this case - and APC drops it out of the cache. For all requests henceforth, even for the index.php case, APC ignores the cached version and insists on compiling the file with Zend. If you enable apc.report_autofilter, this information will be printed to the server error log.
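To make the autofilter rule concrete, here is a toy model of the decision, not APC's actual code: the cached file remembers whether it was compiled with static inheritance, and a request that needs the dynamic version flags it as filtered for good. The struct and function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Toy model of a cached compiled file (hypothetical, not APC's struct). */
typedef struct {
    const char *filename;
    bool static_inheritance; /* compiled while the parent class was already loaded */
    bool filtered;           /* autofiltered: never served from cache again */
} cached_file_t;

/* Can the cached opcodes be used for this request?  parent_loaded says
   whether the parent class is in the class table when the file is included. */
static bool can_use_cached(cached_file_t *entry, bool parent_loaded)
{
    if (entry->filtered)
        return false;             /* fall back to compiling with Zend, forever */
    if (entry->static_inheritance && !parent_loaded) {
        /* The NOP'd-out FETCH_CLASS/DECLARE_INHERITED_CLASS pair is needed
           here, so the static version is unusable: stop caching this file. */
        entry->filtered = true;
        return false;
    }
    return true;                  /* the dynamic version works in both cases */
}
```

Replaying the sequence above: child2.php is served on the first index.php hit, rejected on profile.php, and then rejected again on the next index.php hit because it has been filtered.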

Part of the culprit here is conditional inclusion using include_once. With plain includes, you get an error whenever parent.php is included multiple times - but that can be annoying too. Whereas include_once/require_once can be debugged with Inclued, userspace hacks like rinclude_once or !class_exists() checks make it really hard for me to figure out what's going wrong.

So, if you write One File per Class PHP and use such methods of inclusion, be prepared to sacrifice a certain amount of performance by doing so.

--Doubt is not a pleasant condition, but certainty is absurd.

-- Voltaire

Yak Shaving: You start out with a simple problem. But half-way through fixing it, it explodes into a whole exercise in pointless dependencies. It is a rather recent wordification (never heard of that word? it's a perfectly cromulent word). But considering the fate of the "pre-shaved yaks" guy, who ended up saying "It's a band.", I'd say it is not quite popular enough ... yet.

Now, before I start on the real topic, let me first say that the next release of APC will be the last release compatible with PHP 4.x. So what is wrong with just letting the #ifdefs stay? That's where this snippet of code comes into play.

```php
<?php
apc_store("a", array(new stdclass()));
print_r(apc_fetch("a"));
?>
```

It doesn't work. The problem is very simple - the original patch by Marcus only checks for objects in a very shallow way. It will detect & serialize objects which are passed directly to apc_store - but the check does not extend deeper into the recursive copy functions, so an object nested inside an array slips through.
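The shallow-versus-deep difference is easy to see on a toy value tree. This is a hypothetical stand-in for a zval, not PHP's real structure: `array(new stdclass())` becomes an array node with one object child, which a top-level-only check misses and a recursive check catches.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Toy value tree standing in for a zval (hypothetical types). */
typedef enum { T_LONG, T_STRING, T_ARRAY, T_OBJECT } vtype_t;

typedef struct value {
    vtype_t type;
    size_t nchildren;               /* only meaningful for T_ARRAY */
    const struct value *children;
} value_t;

/* A shallow check: look only at the value handed to the store call. */
static bool needs_serialize_shallow(const value_t *v)
{
    return v->type == T_OBJECT;
}

/* A deep check: recurse into arrays, since an object can hide anywhere. */
static bool needs_serialize_deep(const value_t *v)
{
    if (v->type == T_OBJECT)
        return true;
    if (v->type == T_ARRAY)
        for (size_t i = 0; i < v->nchildren; i++)
            if (needs_serialize_deep(&v->children[i]))
                return true;
    return false;
}
```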

Symmetry: But the zval* copy functions were written to be beautifully symmetric. A copy into cache is nearly the same as copying out of it. And when I say "nearly", I actually mean that until the *_copy_for_execution() optimisations were thrown in, they were actually symmetric - in & out. But objects don't play nicely with that - because they are much more than just data.

In & Out: Objects require asymmetric caching. Storing into the cache is a serialize operation, while retrieving from it is a deserialize. This ensures that they end up with the right kind of pointers and class object initialization, and that the resources they hold in their opaque boxes are properly handled. The objects have to implement the appropriate magic persistence methods.

And thus begins the Yak Shaving. I need to rewrite most of the cache copy-in and copy-out functions to handle the basic asymmetry. But consider this: most of the code in there has been held back for months because I cannot optimize on PHP data structures without breaking the symmetry.

A couple of years ago, I sat through a full hour talk by Rusty Russell about talloc(). Built on top of the trusty old malloc() calls, it simplifies memory management a lot for Samba4. So bear with me as I take a brain dump of my idea - for my very intelligent reader to poke holes in (gopalv shift+2 php net).

APC's allocation strategy is a little brain dead. To allocate 4 bytes of data, it actually requires 24 bytes of space. But much more than the space wastage, I'm more concerned about the number of lock() calls required to cache a single php file - a hello world program takes about 22 lock operations (11 locks, 11 unlocks). Yes, that's actually 22 syscalls just to cache echo "hello world";.

I've previously tried to fix it with partitioned locks. The problem with that was cleaning up the locks, because the extension code would need special cases for every SAPI, due to some bugs in PHP 5.x. So the "if you don't succeed, destroy all evidence" principle made me throw out that idea. But the cache-copy/zend-copy separation should help me revive another approach to this.

Pools: So, now that I'm officially b0rking up APC, I might as well slap a new pool allocator right on top of sma_allocate - à la talloc(). Allocation speed would skyrocket, because in-pool allocs are sequential and have no fragmentation issues from blocks in the middle being free'd. As important as allocation is, the real advantage would be that I could speed up cache expunges by an order of magnitude or more. The 22-syscall cache expunge for hello world would be reduced to a potential pair of syscalls - because it would be a single free of the entire pool space.

Right now the pool is actually built up to be of the following structure.

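```c
typedef struct apc_pool_t apc_pool_t;

struct apc_pool_t {
    int capacity;            /* size of data[] in bytes */
    int avail;               /* bytes still free in data[] */
    void *head;              /* next free byte */
    apc_pool_t *overflow;    /* next pool in the chain once this one is full */
    unsigned char data[];    /* the pool space itself */
};
```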

I've yet to run this through an x86_64 build, but an even multiple of int/void* should align the data area to the word size. And I think nearly every pool should be around 4k (i.e. 4096 - sizeof(apc_pool_t)) for the opcode cache and 1k for the data cache. I might make the latter a runtime tunable, just to pad the APC manual up into an entire book (just in case someone asks me to write one .. *heh*).
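A minimal sketch of the pool idea, assuming plain `malloc()`/`free()` in place of `sma_allocate()` and its free, `size_t` fields instead of `int`, and 8-byte alignment. The `pool_t` type and its functions are hypothetical and differ from the struct above; the point is the shape: allocation is a pointer bump, a full pool chains to an overflow pool, and teardown is one free per pool rather than one per object.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define POOL_ALIGN(n) (((n) + 7u) & ~(size_t)7u)   /* round up to 8 bytes */

typedef struct pool {
    size_t capacity;
    size_t avail;
    unsigned char *head;        /* next free byte in data[] */
    struct pool *overflow;      /* next pool in the chain */
    unsigned char data[];
} pool_t;

static pool_t *pool_create(size_t capacity)
{
    pool_t *p = malloc(sizeof *p + capacity);   /* stands in for sma_allocate() */
    if (!p)
        return NULL;
    p->capacity = capacity;
    p->avail = capacity;
    p->head = p->data;
    p->overflow = NULL;
    return p;
}

/* Bump-allocate n bytes, walking (and if needed extending) the chain. */
static void *pool_alloc(pool_t *p, size_t n)
{
    n = POOL_ALIGN(n);
    while (p->avail < n) {
        if (!p->overflow) {
            size_t cap = n > p->capacity ? n : p->capacity;
            p->overflow = pool_create(cap);
            if (!p->overflow)
                return NULL;
        }
        p = p->overflow;
    }
    void *mem = p->head;
    p->head += n;
    p->avail -= n;
    return mem;
}

/* Free everything at once: one free per pool, none per object. */
static void pool_destroy(pool_t *p)
{
    while (p) {
        pool_t *next = p->overflow;
        free(p);
        p = next;
    }
}
```

Expunging a cached file would then be a single `pool_destroy()` on its pool chain, which is where the saving in lock/unlock pairs would come from.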

None of this is included in APC 3.0.15, which will exit out of the gates as soon as I'm sure I'm happy with its stability. The new code will probably be an APC 3.1 release, marking the end of php4 compat & opening the door for php6 compat.

A two line bug report which exploded into nearly two thousand lines of C code - that's just classic yak shaving.

--10 If it ain't broke, break it;

20 Fix it.

30 Goto 10

Brian Shire has put up his slides (835k PDF) of his php|tek talk.

Quite interesting are the procedures they follow to prevent the very obvious cache slam issues, such as firewalling apache while restarting it, and the priming sub-system they use. The cross-server vars (aka site-vars) seem like a good idea as well - a basic curl POST request moving json data around could potentially serve as a half-reliable cross-server config propagator.

Seeing my code used makes me happy ... very happy, indeed.

--I'm willing to make the mistakes if someone else is willing to learn from them.

APC user cache is cool. It provides an easy way to cache data, in the convenient form of hashes & more hashes within them, to share the data across processes. But it does lend itself to some abuses of shared memory which will leave your pager batteries dead, disk full of cores and your users unhappy. Eventually, the blame trickles down to splatter onto APC land. Maybe people using it didn't quite understand how the system works - but this blog entry is me washing my hands clean of this particular eff-up.

apc_fetch/_store: The source of the problem is how apc_fetch and apc_store are used in combination. For example, take a look at countries.inc from php.net. The simplified version of code looks like this.

```php
if (!($data = apc_fetch('data'))) {
    $data = array( .... );
    apc_store('data', $data);
}
```

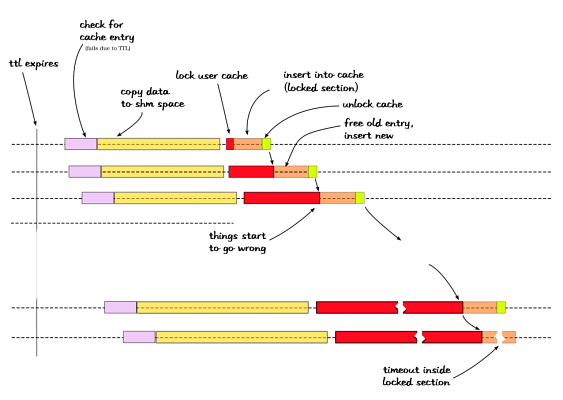

There is something slightly wrong with the above code, but the window for the race condition is too small to be even relevant. But all that changes the moment a TTL is associated with the user cache entry. For a system under heavy load, all hell breaks loose when a user cache entry expires due to TTL. Let me explain that with some pretty pictures and furious hand-waving.

Each of the horizontal lines represents an individual apache process. The whole chain reaction is kicked off by a cache entry disappearing from the user cache. Every single process that hits the apc_fetch line (as above) now falls through to the corresponding apc_store. The apc_store operation does not lock the cache while copying data into shared memory, so all processes are allowed to proceed with the copy into shared memory (yellow block) in parallel. The actual insertion into the cache, however, is locked. The lock is hit nearly simultaneously by all processes, and each one ends up blocking the next process waiting on the lock, in a cascade.

Lock, lock, b0rk!: The cascade effect of waiting on the same lock eventually results in one process being blocked for so long that it hits the PHP execution timeout, or the user gets bored and disconnects from the browser. In apache prefork land, these generate a SIGPROF or SIGPIPE respectively. If that happens while code inside the locked section is being executed, apache might kill PHP before the corresponding unlock is called. And that's when it all goes south into a lockup.

So, when I ran into this for the first time, I did what every engineer should - damage limitation. The signals were bypassed by installing dummy signal handlers and deferring the signals while in locked sections. Somebody needs to rewrite that completely clean-room before it can show up in pecl CVS. The corresponding cache slam in the opcode cache is controlled by checking whether the cache is busy and falling back to zend_compile - but the user cache has no such fallback.

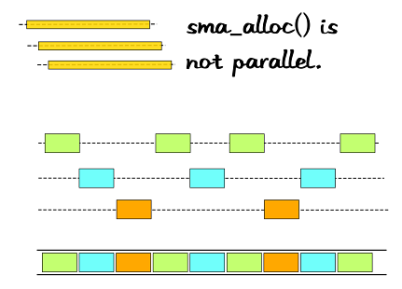

I wish that was the only thing that was wrong with this. But I was slightly misleading when I said the copy into shared memory was parallel. The shared memory allocator still has locks and the actual allocation looks somewhat like this for 3 processes.

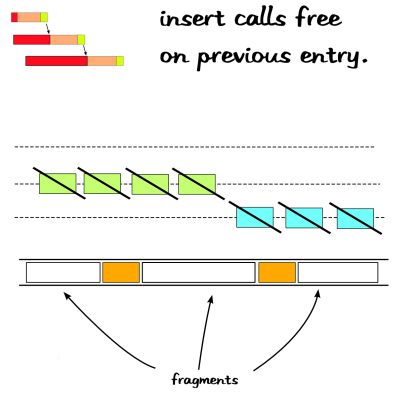

The allocations are interleaved both in time and in actual layout in memory (the bottom bar). So adjacent blocks belong to different processes, which is not a particularly bad thing in itself. But as the previous picture illustrates, every single apc_store() call removes the previous entry and frees the space it occupies. Assuming there are only three processes, the free operation happens as follows.

This results in very heavy fragmentation, due to the large amount of overlap between the shared memory copies (apc_cache_store_zval) across processes. The problem neatly dovetails into the previous one, as the allocate & deallocate cost/time increases with fragmentation. Sooner or later, your apache, php and everything just gives up and starts dying.

There are a couple of solutions to this. Since APC 3.0.12, there is a new function, apc_add, which reduces the window for race conditions - after the first successful insertion of the entry, the execution time of the locked section is significantly reduced. But this still does not fix the allocation churn that happens before entering the locked section. The only safe solution is to never call apc_store() from a user request context. A cron job which hits a local URL to refresh cache data out-of-band is perfectly safe from such race conditions and the associated memory churn.
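A toy, single-process model of the TTL-expiry slam makes the churn visible: every request that missed apc_fetch() builds its own copy, then the inserts happen one at a time under the lock. This is a hypothetical simulation, not APC code. With store semantics each later insert frees the entry it replaces; with add semantics the later copies are simply thrown away. Either way, N requests produce N - 1 wasted copies, which is why apc_add shortens the locked section without fixing the churn, while a single out-of-band writer wastes nothing.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>

#define MAX_PROCS 64

typedef struct {
    int replaced;   /* live entries freed because a newer copy overwrote them */
    int rejected;   /* fresh copies thrown away without ever being inserted */
} churn_t;

/* One insert into a single-key cache; assumed to run under the cache lock. */
static void toy_insert(void **slot, void *copy, bool use_add, churn_t *stats)
{
    if (*slot == NULL) {          /* first writer wins either way */
        *slot = copy;
    } else if (use_add) {         /* apc_add-like: key exists, drop our copy */
        free(copy);
        stats->rejected++;
    } else {                      /* apc_store-like: free the old entry, replace it */
        free(*slot);
        *slot = copy;
        stats->replaced++;
    }
}

/* All nprocs requests miss the fetch at once, each builds a copy
   (in parallel, outside the lock), then the inserts are serialized. */
static churn_t simulate_slam(int nprocs, bool use_add)
{
    churn_t stats = { 0, 0 };
    void *slot = NULL;
    void *copies[MAX_PROCS];

    if (nprocs > MAX_PROCS)
        nprocs = MAX_PROCS;
    for (int i = 0; i < nprocs; i++)
        copies[i] = malloc(128);  /* stands in for the copy into shared memory */
    for (int i = 0; i < nprocs; i++)
        toy_insert(&slot, copies[i], use_add, &stats);
    free(slot);
    return stats;
}
```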

But who's going to do all that ?

--In the Beginning there was nothing, which exploded.

-- Terry Pratchett, Lords and Ladies

The great and awesome teemus has come up with a theme song for APC. Set to the background score of Akon's Smack That!, it goes something like this.

Cache that, give me some more,

Cache that, don't dump some core,

Cache that, don't hit the _store,

Cache that, ~ oohoooh ~

On a sort-of-related note, I might be singing this somewhere.

--Everything in nature is lyrical in its ideal essence, tragic in its fate, and comic in its existence.

-- George Santayana

For any php performance freak, include_once has been a pain in the neck for a long time. In a previous post I had talked about how the common workarounds affect something like APC. With the release of APC 3.0.14, there is a decent workaround which doesn't require any changes to the php code. But first, let me drag out another almost-workaround to the whole include_once problem.

rinclude_once: So we create a new function for the purpose. Let me just call it rinclude_once and use it everywhere. The function takes a filename and records it in a hash before including it.

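```php
function rinclude_once($file)
{
    global $rinclude_files;

    // Relative paths can't be keyed reliably, so defer to the engine.
    if ($file[0] != '/') return include_once($file);

    // Absolute path already seen: skip it, just like include_once.
    if (isset($rinclude_files[$file])) return;

    $rinclude_files[$file] = true;
    include($file);
}
```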

This bit of code works - but only for absolute filenames. I put it through its paces against include_once, without APC. Surprisingly, without APC this code is slower than the php engine's include_once checks. That somewhat makes sense, because the extra include for rinc.php makes a slight dent in the compile and execution time, overshadowing the cycles wasted in include_once's syscall land.

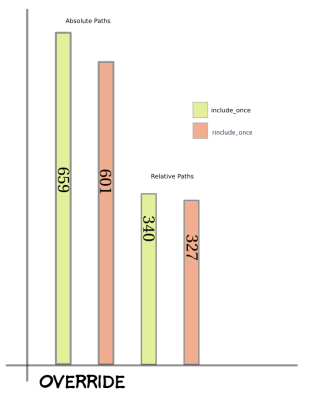

Apart from the slight blip in performance, both bits of code are nearly neck and neck. The real cost of include_once is only evident when you throw in APC. For every file that is included, include_once opens the file before checking for multiple inclusions. The extra system call shows up in the graph below. But rinclude_once does not work at all for relative path includes (the second pair of bars) and therefore trails badly in this race for performance.

include_once_override: The solution to this problem is freakish. APC meddles with the brains of the Zend interpreter and inserts its own version of ZEND_INCLUDE_OR_EVAL opcode handler. The new handler does not indulge in the gratuitous fopen idiocy present in the default handler (but it does fopen once) and checks for the filename in EG(included_files) hash before doing a normal include() (thank pollita for that). And it should come as no surprise that the C module outperforms the php land equivalent.

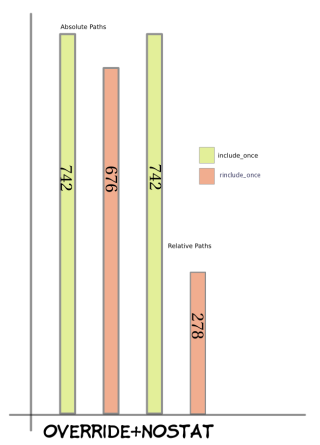

But all of these only work for absolute paths, as the pathetic numbers for the relative path includes show. That is where path canonicalization kicks in. In APC's nostat mode, the filenames have to be absolute paths for the mode to be useful. But rather than force everyone to modify their include lines, APC rewrites the constant strings into the corresponding path names, after lookup. Essentially it converts all relative path includes, such as those from the pear paths, into absolute path includes. This works well with the stat=0 mode because file modifications are ignored after caching.
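The include_once_override check plus path canonicalization boils down to: resolve the name to one canonical absolute path, then look it up in the set of files already included. A minimal sketch, assuming POSIX `realpath()` as the canonicalizer (it resolves relative names against the current directory, whereas PHP also walks include_path) and a linear array standing in for the EG(included_files) hash. All names are hypothetical.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <limits.h>
#include <stdlib.h>
#include <string.h>

#define MAX_INCLUDED 256

/* Stand-in for EG(included_files): canonical paths already included. */
typedef struct {
    char *paths[MAX_INCLUDED];
    size_t count;
} included_set_t;

/* Returns 1 if path should be included now, 0 if it already was,
   -1 if it cannot be resolved (or the set is full). */
static int include_once_check(included_set_t *set, const char *path)
{
    char resolved[PATH_MAX];
    /* Canonicalize so "./a.php", "lib/../a.php" and "/abs/a.php" share one key. */
    if (realpath(path, resolved) == NULL)
        return -1;
    for (size_t i = 0; i < set->count; i++)
        if (strcmp(set->paths[i], resolved) == 0)
            return 0;
    if (set->count == MAX_INCLUDED)
        return -1;
    set->paths[set->count] = strdup(resolved);
    if (!set->paths[set->count])
        return -1;
    set->count++;
    return 1;
}
```

Once every include has been turned into the same absolute key, the "already included?" test is a lookup with no extra open() call, which is the saving the override is after.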

At last, we see both the relative and absolute includes touching the same level of performance - because they are no different from each other in opcode land. But as you can clearly see, that does not improve the performance of the relative include for rinclude_once, because it does dynamic includes. The opcode cache cannot determine what the value of $file will be for the include_once($file); line and cannot optimise it. Performance actually takes a dive, because the relative path name passed in has to be fully resolved for every request.

But having said all this, the same benchmark with plain includes is faster than any of these. I think there is a fair bit of optimisation left in this beast, but what is needed for that is the rest of the world to disappear while I code. Deep hack is hard to achieve when you're a ....

--You can't second-guess ineffability, I always say.

-- Good Omens

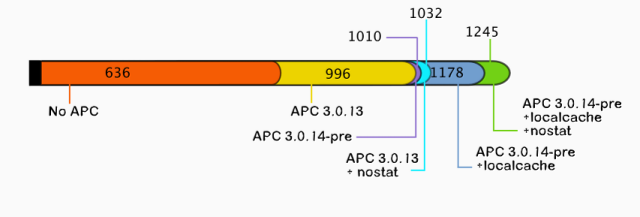

APC 3.0.14 (code named "A bigger boy made me do it, sir") went out a couple of days ago - read the release announcement. The major change in the release is a fair bit of performance improvement for those who don't use threads. Also I've figured out a quick way to limit memory fragmentation when the APC user cache (apc_fetch/_store) is heavily used - the new fraglimit fixes should solve all the small-fragment issues with 3.0.13. And following my recent obsession with drawing pretty graphs for everything, here's how the old version compares to the latest code (requests per second for an include_once benchmark).

To get to such levels of performance, there are some configuration parameters that can be set. apc.localcache creates a process-specific (yes, not thread-specific) lockless cache, which is basically a layered shadow cache on top of the same shm data. apc.include_once_override is also now usable, because of the checks put in to reduce the overhead of include_once. And now, when you enable apc.stat, there's a bit of code which pre-computes the path of the included file, so that it can be effective for includes with relative paths or from include_path dirs.

The release is hopefully stable enough to provide someone with enough ramp-up time to get started, if I stop working full-time on APC. I've spent a fair bit of time stabilizing basic functionality and have kept most of these optimisations optional, to be able to look at other work for a while.

--Periods of productive stability, interrupted by bursts of test-bed change is much less disruptive than constant ripples of change.

-- Fred Brooks Jr, "The Mythical Man Month"

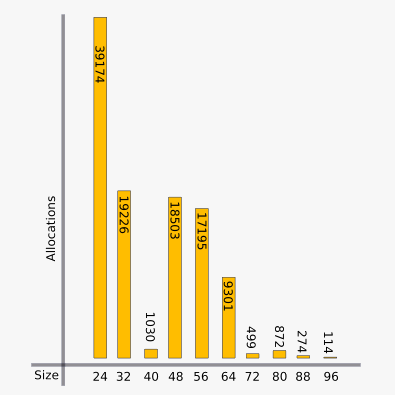

So, there I was debugging what looked like a memory leak in APC - a perfectly straightforward bug at first glance. APC's internal allocator was leaving a bunch of 40-byte fragments all over the place. The fragments were literally killing APC allocate and deallocate performance - with nearly 85k fragments lying around in the 128MB cache that www.php.net uses. Even though the allocator is a first-fit system, it still has to traverse a large number of blocks to locate the previous free block before freeing any particular allocated block.

Basically, it was having serious memory performance issues. This had something to do with one of the changes I'd put into APC-3.0.13 - canary checks. The canary increased the memory header size by exactly one size_t. This broke the default word alignment on x86, but I thought I had all bases covered when I put in the appropriate word aligns.
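A sketch of what a canary check looks like, assuming a header with the block size plus one extra word holding a magic value that is verified on free. The header layout, the magic constant and the function names are hypothetical, not APC's; the extra word is exactly the kind of addition that grows the header and can disturb alignment.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

#define CANARY 0x42424242u   /* arbitrary magic value (assumption) */

/* Hypothetical block header: size plus one extra word for the canary. */
typedef struct {
    size_t size;
    size_t canary;
} block_hdr_t;

static void *canary_alloc(size_t n)
{
    block_hdr_t *h = malloc(sizeof *h + n);
    if (!h)
        return NULL;
    h->size = n;
    h->canary = CANARY;
    return h + 1;             /* user data starts right after the header */
}

/* Returns 0 on success, -1 if the header looks corrupted (overrun or
   invalid pointer), in which case the block is not freed. */
static int canary_free(void *p)
{
    block_hdr_t *h = (block_hdr_t *)p - 1;
    if (h->canary != CANARY)
        return -1;            /* report instead of corrupting the free list */
    free(h);
    return 0;
}
```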

24 Bytes: Now, the smallest allocation in APC is 24 bytes on x86. The header is 12 bytes (3 x sizeof(size_t)), padded up to a multiple of 8 for a total of 16 bytes. Then comes the data area (say, 1 byte), which is padded up to 8 bytes. Add it all together and the smallest block APC can allocate is 24 bytes.
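The 24-byte arithmetic, written out as a function: round the header and the data each up to 8 bytes and add them. This assumes the 12-byte header and 8-byte granularity from the text; the function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define ALIGNMENT 8   /* allocation granularity from the text */

/* Round n up to the next multiple of ALIGNMENT. */
static size_t align_up(size_t n)
{
    return (n + ALIGNMENT - 1) / ALIGNMENT * ALIGNMENT;
}

/* Total block size for data_len bytes of payload behind a header of
   header_len bytes (12 = 3 x sizeof(size_t) on 32-bit x86). */
static size_t block_size(size_t header_len, size_t data_len)
{
    return align_up(header_len) + align_up(data_len);
}
```

The same formula gives 40 bytes for any request of 17-24 bytes. With an 8-byte size_t (x86_64) the header would be 24 bytes, so under this formula the smallest block would grow to 32 bytes.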

Due to some strange quirk of code, 40 bytes seems to be a very unpopular size to allocate. Allocations of 17-24 bytes of data go into a 40-byte block, and for some reason those seem really rare. I ran through a bunch of the standard tests I run with APC to get some sane statistics out of it. After running a hundred-odd random tests from the standard phpt files, I had a pile of data. Twenty minutes later I had pulled that data together into a rough histogram (which is nearly the same thing as a bar chart for discrete data, I suppose) by printing out SVG and applying styling in inkscape.

Maybe I'm just hooked on drawing pretty graphs. But it clearly indicates what is going wrong. There are not enough 40-byte allocations to consume all the spare chunks being created. But isn't this also true for the 80- or 96-byte blocks, you might ask. Unlike the 40-byte block, both the 80 (32 + 48) and 96 (48 + 48) byte blocks are easily consumed by requests for smaller blocks. The 40-byte block, on the other hand, cannot be split into smaller blocks, because it is smaller than 24 x 2.
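The splitting argument can be stated as a one-line rule: a free block can be carved up only if the leftover is itself at least the 24-byte minimum. A sketch with hypothetical names, assuming that minimum:

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MIN_BLOCK 24   /* smallest block on 32-bit x86, per the arithmetic above */

/* A free block can serve `request` bytes and still be split only if the
   leftover is itself big enough to be a block. */
static bool can_split(size_t free_block, size_t request)
{
    return request <= free_block && free_block - request >= MIN_BLOCK;
}

/* Can this free block be divided into two valid blocks at all? */
static bool splittable(size_t free_block)
{
    return free_block >= 2 * MIN_BLOCK;
}
```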

Thus, due to the lack of demand and the inability to compromise (*heh*), the 40-byte blocks remain unwilling to accept any commitments. Until a memory block nearby is free'd, the block will sit around waiting for someone to allocate 40 bytes - which, as the pretty graph shows, is not a popular choice.

Now to sleep on this problem and hope I wake up with a solution - clear and perfect.

--If it breaks then you get to keep both pieces.

-- Warranty disclaimer for the chat program.

For every other php programmer who reads Rasmus's no-framework MVC, the following lines are what they often end up remembering.

3. Fast

* Avoid include_once and require_once

* Use APC and apc_store/apc_fetch for caching data that rarely changes

Even though include_once has its performance hit, some people avoid it with some rather simple code borrowed from their C experience. Here is how the code generally looks.

```php
<?php
if (defined(__FILE__)) return;  // already included once: bail out
define(__FILE__, true);
```

This is nearly identical to what you would use in a C header as an include guard against multiple inclusion. But as the emergence of precompiled headers shows, even those folks are trying to reduce the expense of including & pre-processing the same file multiple times.

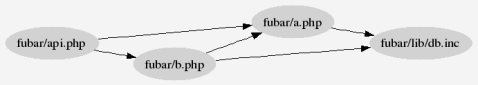

I do not deny that the include check above works. But it checks for double inclusion during execution, which is exactly what was wrong with include_once as well. Even worse, it hits APC really badly. The situation takes a bit of context to understand - let us pick a 'real' library, fubar (name changed to keep my job), which has been avoiding include_once. Here is what the logical dependency graph looks like:

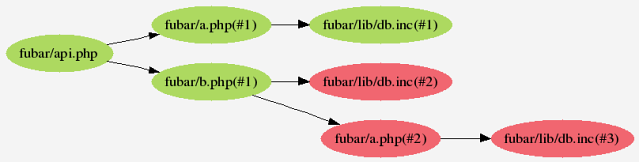

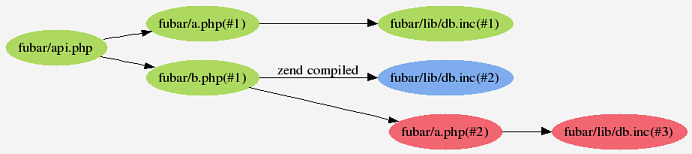

In a moment of madness, you decide to add all the includes properly for design coherence, especially for that doxygen output to look purty. But instead of using include_once as a sane man should, you remember the wisdom of elders and use plain includes. And then you kick it up a notch with inclusion checks as illustrated above. But this is what Zend (and by design APC, too) actually compiles:

The nodes marked in red are never actually used because of the inclusion checks, but they are still compiled and installed. Zend pollutes the function table and class table for such files with a bunch of mangled names for each function - and APC serves up a local copy of the same cached file for each inclusion, all with the same mangled name, by ignoring redeclaration errors.

If you were using include_once, these files would never have been compiled. The above solution *seems* to work in APC land, but in reality it does not play very nice at high cache loads. And while debugging *cough* fubar, I ran into a very corner-case mismatch issue.

During a cache slam or expunge - when the cache is being written to by one process, other processes do not hit the cache and fall back to zend compile calls. Now imagine such a cache fail happening mid-way in one request.

Now the executor has two types of opcode streams to deal with, one which is Zend fresh! and one which is from the APC (Opcodes in a Can) freezer. Even though only a couple of opcodes in the normal opcode stream are executed, the pre-execution phase of installing classes and functions in their respective tables runs into unknown issues, thanks to combinations of early binding and late binding, which was behind that bug from hell in class inheritance. But more annoyingly, I cannot reproduce them under ideal testing conditions - which has cost me about two nights of sleep.
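The cache-busy fallback that produces these mixed opcode streams can be sketched with a non-blocking lock attempt: if the cache lock is taken, compile privately instead of waiting. This is a hypothetical illustration using `pthread_mutex_trylock()`, not APC's actual check, and the lookup itself is elided.

```c
#include <assert.h>
#include <errno.h>
#include <pthread.h>

typedef enum { SERVED_FROM_CACHE, COMPILED_FRESH, LOOKUP_ERROR } source_t;

/* Try the cache without waiting; if it is busy, compile privately. */
static source_t lookup_or_compile(pthread_mutex_t *cache_lock)
{
    int rc = pthread_mutex_trylock(cache_lock);
    if (rc == EBUSY)
        return COMPILED_FRESH;    /* writer in progress: zend_compile() it ourselves */
    if (rc != 0)
        return LOOKUP_ERROR;
    /* ... look up the file and copy its cached opcodes out here ... */
    pthread_mutex_unlock(cache_lock);
    return SERVED_FROM_CACHE;
}
```

Because the decision is made per file, one request can get some files from the cache and others fresh from the compiler, which is exactly the mix described above.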

So I implore, beg and plead - please do not write code like this to avoid include_once; it just makes things slower and heavier on memory, and increases cache lock contention. At least don't do it in the name of performance - I wrote this blog entry just because the guy who wrote fubar said "I didn't know it worked this way". There are a bunch of other such gotchas, which make up my current talk proposal for OSCON '07.

And just out of curiosity, I'm wondering whether an apc.always_include_once might help such code. But on the other hand, I hate optimising for bad code; it's much cleaner to drop such files from the cache - after all, "they don't deserve the performance".

So, trust me when I say this ... leave include checks to the experts !

--Too much is more than enough by definition.

APC released version 3.0.13. The last couple of months haven't produced too much code from me, so most of the changes in there are due to the efforts of shire & rasmus. But I've left a couple of booby traps in there for invalid free() calls, which should turn a decent number of those random memory corruptions into more useful error reports.

I've been unsuccessful in making the Real World go away for long enough to actually rewrite the shm allocator - not even a patch job with a linked list, rather than the mythical lockless allocator I've been promising for three months.

I feel guilty, but there's so much to be done and I'm only ... *counts* ... one man.

--After months of careful refrigeration, Debian 2.0 is finally cool enough to release.

-- topic on #debian

APC released version 3.0.12. Because I've been sitting at home, close to a well stocked refrigerator and with no pool table in walkable distance, I've got a fair bit of work done on APC [1].

And some out of this world patches in the pipeline too.

--Is it better to abide by the rules until they're changed or help speed the change by breaking them?

A lot of people have been complaining about APC's stability issues. In fact, they get angrier when I mention that it works for Yahoo!. During the FIFA slams on the servers, we put in a few extra things in APC to make it withstand the hammering. Now, a couple of these protections were borrowed from code that Y! already had lying around and a few more of them were BSD specific. But the short story is that I can never push those changes to the open source version. Nor can I even rewrite the same features after reading Y! © code which does the same, at least not while I'm here.

*But*, one feature that we borrowed was discussed quite a while back on the php-internals mailing list. If any of you think you know enough to understand what this means and implement it under the PHP license, it might be accepted as a patch to php.

All it needs is some elbow grease and a bit of unix magic :)

--signal(i, SIG_DFL); /* crunch, crunch, crunch */

-- Larry Wall in doarg.c from the perl source code

APC released version 3.0.11. I've been hunting the entire codebase for memory issues and even laid to rest the bug from hell.

Now, all that remains is for all new bug reports to come in.

--Your parity check is overdrawn and you're out of cache.

After much trial and tribulation, Wikipedia is finally using APC. They've been playing around with Turck MMCache and other accelerators for a while. But currently APC is the only one with the ball as far as caching is concerned. Recently, somebody did a benchmark of the common accelerators used in php land - read it here. At that point APC came out ahead on two of the three tests, though my commit last night has probably pushed APC below eAccelerator; that commit is required for APC to run properly on a multi-CPU apache under high load.

| Accelerator | hw.php | deserialize.php | include-pma.php |
|---|---|---|---|
| eAccelerator | 1093 | 160 | 86 |
| apc | 1100 | 163 | 83 |
| PHP alone | 886 | 157 | 28 |

Now, the next heavy user of PHP around is sourceforge.net, which is apparently still using eAccelerator. APC still has a few chinks in its armour, but it is still *my* work. And much more importantly, it for once doesn't appear doomed :)

--Real programs don't eat cache.