I had a very simple problem. Actually, I didn't have a problem, I had a solution. But let's assume for the sake of conversation that I had a problem to begin with. I wanted one of my favourite hacked-up tools to update in-place and live. The sane way to accomplish this is SUP, the Simple Update Protocol. But that was too simple. You can skip ahead and poke at my sample code, or just read on.

I decided to go away from such a pull model and write a custom http server to suit my needs. Basically, I wanted to serve out a page feed using a server content push rather than clients checking back. A basic idea of what I want looks like this.

twisted.web: Twisted web makes it really easy to write an HTTP web server without ever wondering what an HTTP protocol line looks like. A subclass of twisted.web.resource.Resource plugs into the server in place, ready to serve requests. So, I implemented a simple resource like this.

```python
from twisted.internet import reactor, threads, defer, task
from twisted.web import resource, server

class WebChannel(resource.Resource):
    isLeaf = True  # this resource handles every path itself

    def __init__(self):
        resource.Resource.__init__(self)

    def render_GET(self, request):
        # twisted.web expects the response body as bytes
        return b"Hello World"

site = server.Site(WebChannel())
reactor.listenTCP(4071, site)
reactor.run()
```

Controller: The main issue with the controller is that it needs to be locked down to work as a choke point for data. Thankfully twisted provides twisted.python.threadable, which lets you synchronize a class's methods with the following snippet.

```python
from twisted.python import threadable

class Controller(object):
    synchronized = ["add", "remove", "update"]
    ...

threadable.synchronize(Controller)
```
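The idea behind threadable.synchronize is that every method named in the class's synchronized list gets wrapped so that only one thread at a time runs it on a given instance. Here is a rough stdlib-only analogue of that idea, not Twisted's implementation; the helper names synchronize_methods and _instance_lock, and the Controller method bodies, are hypothetical.

```python
import functools
import threading

_lock_creator = threading.Lock()

def _instance_lock(obj):
    # Lazily give each instance its own re-entrant lock.
    lock = obj.__dict__.get("_sync_lock")
    if lock is None:
        with _lock_creator:
            lock = obj.__dict__.setdefault("_sync_lock", threading.RLock())
    return lock

def _locked(method):
    @functools.wraps(method)
    def wrapper(self, *args, **kwargs):
        with _instance_lock(self):
            return method(self, *args, **kwargs)
    return wrapper

def synchronize_methods(cls):
    """Wrap every method named in cls.synchronized with a per-instance lock."""
    for name in cls.synchronized:
        setattr(cls, name, _locked(getattr(cls, name)))
    return cls

class Controller(object):
    synchronized = ["add", "remove", "update"]

    def __init__(self):
        self.clients = []
        self.updates = 0

    def add(self, client):
        self.clients.append(client)

    def remove(self, client):
        self.clients.remove(client)

    def update(self, data):
        # read-modify-write that could interleave badly without the lock
        current = self.updates
        self.updates = current + 1

synchronize_methods(Controller)
```

A re-entrant lock is used so a synchronized method can call another synchronized method on the same instance without deadlocking.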

loop & sleep: The loop and sleep is the standard way to run commands with a delay, but twisted provides an easier way than handling it with inefficient sleep calls. The twisted.internet.task.LoopingCall is designed for that exact purpose. To run a command in a timed loop, the following code "Just Works" in the background.

```python
updater = task.LoopingCall(update)
updater.start(1)  # run every second
```

Combine a controller, the HTTP output view and a trigger acting as the model that pushes updates to the controller, and you have a scalable concurrent server which can hold nearly as many connections as I have file descriptors per process.
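The controller/view/trigger split can be sketched with nothing but asyncio. In this hypothetical version each connected client is an asyncio.Queue that the view drains, the controller fans every update out to all of them, and the trigger is a periodic coroutine standing in for LoopingCall. None of these names come from the original sample code.

```python
import asyncio

class PushController:
    """Fans each update out to every subscribed client queue."""

    def __init__(self):
        self.clients = set()

    def add(self):
        queue = asyncio.Queue()
        self.clients.add(queue)
        return queue

    def remove(self, queue):
        self.clients.discard(queue)

    def update(self, data):
        for queue in self.clients:
            queue.put_nowait(data)

async def trigger(controller, interval, count):
    """Model side: push `count` updates, one every `interval` seconds."""
    for i in range(count):
        controller.update("tick %d" % i)
        await asyncio.sleep(interval)

async def view(queue, count):
    """View side: what an HTTP handler would write out to its socket."""
    return [await queue.get() for _ in range(count)]

async def main():
    controller = PushController()
    a, b = controller.add(), controller.add()
    _, got_a, got_b = await asyncio.gather(
        trigger(controller, 0.01, 3), view(a, 3), view(b, 3))
    return got_a, got_b

if __name__ == "__main__":
    print(asyncio.run(main()))
```

Because everything here runs on one event-loop thread, this version needs no lock; the locking only matters when, as in the post, other threads feed the controller.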

You can install twisted.web and try out my sample code, which is a dynamic time server. Hit the URL with curl to actually see the traffic, or with Firefox to see how it behaves. If you're bored with that sample, pull the svgclock version, a trigger which generates a dynamic SVG clock instead of boring plain text. I've got the triggers working with ActiveMQ and MySQL tables in my actual working code-base.

--Success just means you've solved the wrong problem. Nearly always.

I'm sorry, I just received a late update (at great cost) from Anthony Baxter.

The last keynote from Linux.conf.au was about the upcoming Python "We'll break all your code" 3k. Perhaps not quite keynote material, but it covered a gamut of issues which will break old code when the move happens - and he did work for a colorful company. The set & dict comprehensions, function annotations and dictionary views are probably worth the terrible loss of reduce() from the builtins (it moves to functools), my favourite companion to map(). And obviously old-style classes & string exceptions were excess fat to be trimmed anyway. But there was more interesting code to test.

```
>>> from __future__ import braces
  File "<stdin>", line 1
SyntaxError: not a chance
>>> import this
```

And check the output twice.

--I believe a little incompatibility is the spice of life, particularly if he has income and she is pattable.

-- Ogden Nash

posted at: 04:13 | path: /conferences | permalink |

DHCP makes for bad routing. My original problem with DHCP (i.e. name resolution) has been solved by nss-mdns, completely replacing my hacky DNS server - ssh'ing into hostname.local names works just fine.

But sadly, my WiFi router does not understand mDNS hostnames. Setting up a tunnel into my desktop at home, so that I could access it from the office (or Australia, for that matter), becomes nearly impossible with DHCP moving the host's IP around.

UPnP: Enter UPnP, which has a feature called NAT Traversal. NAT traversal allows for opening up arbitrary ports dynamically, without any authentication whatsoever. Unfortunately, there doesn't seem to be any easily usable client I could use to send UPnP requests. But nothing stops me from brute-hacking a NAT b0rker with raw sockets. And for my Linksys, this is what my POST data looks like.

```xml
<?xml version="1.0" ?>
<s:Envelope s:encodingStyle="http://schemas.xmlsoap.org/soap/encoding/" xmlns:s="http://schemas.xmlsoap.org/soap/envelope/">
  <s:Body>
    <u:AddPortMapping xmlns:u="urn:schemas-upnp-org:service:WANIPConnection:1">
      <NewRemoteHost/>
      <NewExternalPort>2200</NewExternalPort>
      <NewProtocol>TCP</NewProtocol>
      <NewInternalPort>22</NewInternalPort>
      <NewInternalClient>192.168.1.2</NewInternalClient>
      <NewEnabled>1</NewEnabled>
      <NewPortMappingDescription>SSH Tunnel</NewPortMappingDescription>
      <NewLeaseDuration>0</NewLeaseDuration>
    </u:AddPortMapping>
  </s:Body>
</s:Envelope>
```

And here's the quick script which sends off that request to the router.
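The script itself isn't included in this copy of the post. As a hedged stand-in, here is a minimal sketch that builds the same envelope and POSTs it with http.client. The router address, port and especially the control path are placeholders (the real control URL comes from the router's UPnP description XML), and the field values are not XML-escaped, so keep them plain.

```python
import http.client

# Assumptions: placeholders, adjust for your router.
ROUTER_HOST = "192.168.1.1"
ROUTER_PORT = 80
CONTROL_PATH = "/upnp/control/WANIPConnection"  # hypothetical control URL
SERVICE = "urn:schemas-upnp-org:service:WANIPConnection:1"

ENVELOPE = """<?xml version="1.0" ?>
<s:Envelope s:encodingStyle="http://schemas.xmlsoap.org/soap/encoding/" xmlns:s="http://schemas.xmlsoap.org/soap/envelope/">
<s:Body>
<u:AddPortMapping xmlns:u="{service}">
<NewRemoteHost/>
<NewExternalPort>{external}</NewExternalPort>
<NewProtocol>{protocol}</NewProtocol>
<NewInternalPort>{internal}</NewInternalPort>
<NewInternalClient>{client}</NewInternalClient>
<NewEnabled>1</NewEnabled>
<NewPortMappingDescription>{description}</NewPortMappingDescription>
<NewLeaseDuration>0</NewLeaseDuration>
</u:AddPortMapping>
</s:Body>
</s:Envelope>"""

def build_request(external, internal, client, protocol="TCP", description="SSH Tunnel"):
    """Return (body bytes, headers) for an AddPortMapping SOAP call."""
    body = ENVELOPE.format(service=SERVICE, external=external, internal=internal,
                           client=client, protocol=protocol, description=description)
    headers = {
        "Content-Type": 'text/xml; charset="utf-8"',
        "SOAPAction": '"%s#AddPortMapping"' % SERVICE,
    }
    return body.encode("utf-8"), headers

def add_port_mapping(external, internal, client):
    body, headers = build_request(external, internal, client)
    conn = http.client.HTTPConnection(ROUTER_HOST, ROUTER_PORT, timeout=5)
    try:
        conn.request("POST", CONTROL_PATH, body, headers)
        response = conn.getresponse()
        return response.status, response.read()
    finally:
        conn.close()

if __name__ == "__main__":
    print(add_port_mapping(2200, 22, "192.168.1.2"))
```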

--Air is just water with a lot of holes in it.

While working towards setting up the Hack Centre in FOSS.in, I had a few good ideas on what to do with my "free" time in there. The conference has come and gone, I haven't even gotten started and it looks like I'll need 36 hour days to finish these.

apt-share: I generally end up copying out my /var/cache/apt/archives into someone else's laptop before updating their OS (*duh*, ubuntu). I was looking for some way to automatically achieve it with a combination of an HTTP proxy and mDNS Zeroconf.

Here's how I *envision* it. The primary challenge is locating a similar machine on the network. That's where Zeroconf kicks in - Avahi service-publish, with the OS details in the TXT fields, sounds simple enough to implement. Having done that, it should be fairly easy to locate other servers running the same daemon (including their IP, port and OS details).

Next step would be to write a quick HTTP pseudo-cache server. The HTTP interface should provide enough means to read out the apt archive listing to other machines. It could be built on top of the BaseHTTPServer module or with twisted. Simply put, all it does is send 302 responses to requests for .deb files (AFAIK, they are all uniquely named), pointing at the appropriate ".local" Avahi hostnames. And if it can't find the file on the local LAN, it works exactly like a transparent proxy and goes upstream.

Now, that's P2P.
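The redirect decision at the heart of that pseudo-cache fits in a few lines. This is a hedged sketch on Python 3's http.server (the successor of BaseHTTPServer): the PEERS table (which in the envisioned design would be filled from Avahi browsing), the port 3142, the upstream mirror URL and the peer URL layout are all assumptions, and for brevity it redirects misses upstream instead of proxying them.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
import posixpath

# Hypothetical peer table: "host.local:port" -> set of .deb filenames it advertised.
PEERS = {}
UPSTREAM = "http://archive.ubuntu.com"  # assumed upstream mirror

def redirect_target(path, peers, upstream=UPSTREAM):
    """Return the URL a request for `path` should be redirected to."""
    filename = posixpath.basename(path)
    if filename.endswith(".deb"):
        for host, debs in sorted(peers.items()):
            if filename in debs:
                return "http://%s/%s" % (host, filename)
    return upstream + path  # not on the LAN: go upstream

class AptShareHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        self.send_response(302)
        self.send_header("Location", redirect_target(self.path, PEERS))
        self.end_headers()

if __name__ == "__main__":
    HTTPServer(("", 3142), AptShareHandler).serve_forever()
```

Keying on the bare filename relies on the post's observation that .deb names are unique.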

WifiMapper: Unlike the standard wardriving toolkit, I wanted something to map out and record the signal strengths of a single SSID & associate it with GPS co-ordinates. A rather primitive way to do it would be to change the wireless card to run in monitor mode, iwlist scan your way through the place & map it using a bluetooth/USB gps device (gora had one handy).

But the data was hardly the point of the exercise. The real task was presenting it on top of a real topological map as a set of contour lines, connecting points of similar signal strength. In my opinion, it would be fairly easy to print out an SVG file of the data using nothing more complicated than printf. But the visualization is the really cool part, especially with altitude measurements from the GPS. It makes it much simpler to survey a conference space like foss.in than just walking around with a laptop, looking at the signal bars.
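As a rough illustration of the printf-level SVG output, here is a sketch that plots each (lat, lon, signal) sample as a coloured dot using only string formatting. It draws dots, not contour lines (those would need interpolation on top), and the -90 to -30 dBm colour range and the sample tuple layout are assumptions.

```python
def to_svg(samples, width=400, height=400):
    """samples: list of (lat, lon, signal_dbm) tuples -> SVG document string."""
    lats = [s[0] for s in samples]
    lons = [s[1] for s in samples]
    lat_min, lat_span = min(lats), (max(lats) - min(lats)) or 1.0
    lon_min, lon_span = min(lons), (max(lons) - min(lons)) or 1.0
    parts = ['<svg xmlns="http://www.w3.org/2000/svg" width="%d" height="%d">'
             % (width, height)]
    for lat, lon, dbm in samples:
        x = (lon - lon_min) / lon_span * width
        y = height - (lat - lat_min) / lat_span * height  # north is up
        # assumed range: -90 dBm (weak, red) .. -30 dBm (strong, green)
        strength = min(max((dbm + 90) / 60.0, 0.0), 1.0)
        colour = "rgb(%d,%d,0)" % (255 * (1 - strength), 255 * strength)
        parts.append('<circle cx="%.1f" cy="%.1f" r="4" fill="%s"/>' % (x, y, colour))
    parts.append("</svg>")
    return "\n".join(parts)
```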

To put it simply, I've got all the pieces and the assembly instructions, but not the time or the patience to go deep hack. If someone else finds all this interesting, I'll be happy to help.

And if not, there's always next year.

--Nothing is impossible for the man who doesn't have to do it himself.

-- A. H. Weiler

Browsing through my list of unread blog entries, I ran into one interesting gripe about Lisp. The argument essentially went that Lisp's parenthesis structure requires you to read an expression from the inside out - something which raises an extra barrier to understanding functional programming. And as I was reading the suggested syntax, something went click in my brain.

System Overload !!: I'm not a great fan of operator overloading. Sure, I love the "syntax is semantics" effect I can achieve with it, but I've run into far too many recursive gotchas in the process. Except in a couple of places (scapy packet assembly being one), I generally don't like code that uses it heavily. Still, perversity has its place.

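So, I wrote a 4-line class which lets me use a kind of shell pipe syntax - as long as I don't break any Python operator precedence rules (Aha!, gotcha land). The class relies on Python's __ror__ operator overload: for lhs | pype, Python first tries the left operand's __or__, and since a list or string doesn't know how to OR itself with a Pype, it falls back to the right operand's __ror__. Python seems to be one of the few languages I know of which distinguishes the RHS and LHS versions of bitwise-OR.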

```python
class Pype(object):

    def __init__(self, op, func):
        self.op = op
        self.func = func

    def __ror__(self, lhs):
        return self.op(self.func, lhs)
```

That was simple. And it is pretty simple to use as well. Here's a quick sample I came up with (lambdas; I can't live without them now).

```python
# Python 2: string.upper, builtin reduce and the print statement
import string

double = Pype(map, lambda x : x * 2)
ucase = Pype(map, lambda x: string.upper(x))
join = sum = Pype(reduce, lambda x,y: x+y)

x = [1,2,3,4,5,6] | double | sum
y = "is the answer" | ucase | join
print x,y
```

And quite unlike the shell mode of pipes, this one is full of lists. Where in shell land you'd end up with all operations talking in plain strings of characters (*cough* bytes), here the system talks in lists. For instance, ucase maps over the string one character at a time, so join actually gets a list like ['I', 'S', ' ', ...]. Keep that in mind and you're all set to go.
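For anyone trying this on Python 3, where map() returns a lazy iterator, reduce() lives in functools and string.upper() is gone, here is a hedged port. The list() materialisation inside __ror__ and the rename of sum to total (to avoid shadowing the builtin) are my changes, not part of the original sample.

```python
from functools import reduce

class Pype(object):
    def __init__(self, op, func):
        self.op = op
        self.func = func

    def __ror__(self, lhs):
        # Called for `lhs | pype`: list and str have no __or__ that accepts a Pype.
        result = self.op(self.func, lhs)
        # map() is lazy on Python 3; materialise it so the next stage gets a list.
        return list(result) if isinstance(result, map) else result

double = Pype(map, lambda x: x * 2)
ucase = Pype(map, str.upper)
join = total = Pype(reduce, lambda x, y: x + y)

x = [1, 2, 3, 4, 5, 6] | double | total
y = "is the answer" | ucase | join
print(x, y)
```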

Oh, and run my sample code. Maybe there's a question it answers.

--Those who do not understand Unix are condemned to reinvent it, poorly.

-- Henry Spencer

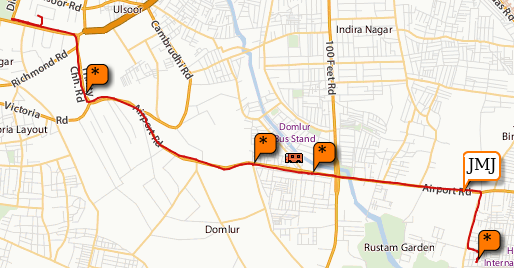

A few months back I bought myself a cycle - a Firefox ATB. For nearly two months before heading out to Ladakh, I cycled to work. On one of those days, I carried yathin's GPS along with me. So last night, out of sheer boredom (and inspired by one of shivku's tech talks), I dug up the GPX files. After merging the tracks and waypoints, I managed to plot the track on a map with the help of some javascript. Here's how it looks.

I have similar tracklogs from Ladakh, but they have upwards of 2000 points for each day, which do not play nicely with the map rendering - not to mention the lack of maps at that zoom level. I probably need to try the Google Maps API to see if it automatically removes nodes which resolve to the same pixel position at a given zoom level.
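Thinning a track down to one point per pixel is easy to do yourself. This sketch projects each point with the standard Web Mercator formula (a 256-pixel tile at zoom 0, doubling per level) and drops consecutive points that land on the same pixel. The function names are hypothetical, and it only compares neighbouring points rather than doing full line simplification.

```python
import math

TILE_SIZE = 256

def to_pixel(lat, lon, zoom):
    """World pixel coordinates of (lat, lon) at `zoom` in Web Mercator."""
    scale = TILE_SIZE * (2 ** zoom)
    x = (lon + 180.0) / 360.0 * scale
    siny = math.sin(math.radians(lat))
    siny = min(max(siny, -0.9999), 0.9999)  # clamp near the poles
    y = (0.5 - math.log((1 + siny) / (1 - siny)) / (4 * math.pi)) * scale
    return int(x), int(y)

def thin_track(points, zoom):
    """Keep a point only if it lands on a different pixel than the last kept one."""
    kept = []
    last = None
    for lat, lon in points:
        pixel = to_pixel(lat, lon, zoom)
        if pixel != last:
            kept.append((lat, lon))
            last = pixel
    return kept
```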

I've put up the working code along with its gpx parser. To massage my data into the shape I want, I also have a Python gpx parser. And just for the record, I'm addicted to map/reduce/filter, lambdas and bisect.
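That Python parser isn't reproduced here. As a hedged sketch of the idea, this version reads every trkpt with xml.etree.ElementTree, ignoring the GPX namespace so that it handles both GPX 1.0 and 1.1 files, and merges several files by timestamp. It assumes UTC ISO 8601 timestamps, which sort correctly as plain strings.

```python
import xml.etree.ElementTree as ET

def _local(tag):
    """Strip the '{namespace}' prefix ElementTree puts on tag names."""
    return tag.rsplit("}", 1)[-1]

def parse_gpx(text):
    """Return [(time, lat, lon)] for every track point, sorted by time."""
    points = []
    for elem in ET.fromstring(text).iter():
        if _local(elem.tag) != "trkpt":
            continue
        when = None
        for child in elem:
            if _local(child.tag) == "time":
                when = child.text
        points.append((when, float(elem.get("lat")), float(elem.get("lon"))))
    return sorted(points, key=lambda p: p[0] or "")

def merge_tracks(*texts):
    """Merge the track points of several GPX documents into one timeline."""
    return sorted((p for t in texts for p in parse_gpx(t)), key=lambda p: p[0] or "")
```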

--If you want to put yourself on the map, publish your own map.

Most wireless routers come without a DNS server to complement their DHCP servers. The ad-hoc nature of the network keeps me guessing what IP address each machine has. Even more annoying were the ssh man-in-the-middle attack warnings which kept popping up left and right. After one prolonged game of Guess which IP I am?, I had a brainwave.

MAC Address: Each machine has a unique address on the network - namely the hardware MAC address. The simplest solution to my problem was an easy way to bind a DNS name to a particular MAC address. Even if DHCP hands out a different IP after a reboot, the MAC address remains the same.

Easier said than done: I've been hacking up variants of the twisted.names server for my other DNS hacks. Writing a completely custom resolver for twisted turned out to be trivial once I figured out how (most things are), except that the dog seems to have eaten all the documentation.

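```python
from twisted.internet import defer
from twisted.names import common, dns

class CustomResolver(common.ResolverBase):

    def _lookup(self, name, cls, type, timeout):
        print("resolve(%s)" % name)
        # every query gets the same A record: (answers, authority, additional)
        return defer.succeed((
            [dns.RRHeader(name, dns.A, dns.IN, 600,
                          dns.Record_A("10.0.0.9", 600))], [], []
        ))
```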

Deferred Abstraction: The defer model of asynchronous execution is pretty interesting. A quick read through of the twisted deferred documentation explains exactly why it came into being and how it works. It compresses callback based design patterns into a neat, clean object which can then be passed around in lieu of a result.

But what is more interesting is how the defer pattern has been converted into a behind-the-scenes decorator. The following code has a synchronous function converted into an async defer.

```python
from twisted.internet.threads import deferToThread

deferred = deferToThread.__get__

@deferred
def syncFunction():
    return "Hi !"
```

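The value returned from the decorated function is a Deferred object which can then have callbacks or errbacks attached to it. The trick is that deferToThread.__get__(syncFunction) binds syncFunction as deferToThread's first argument, exactly the way a method gets bound to its instance, so calling syncFunction() really calls deferToThread(syncFunction) and runs it on a worker thread. This makes the pattern simple to use while allowing near complete ignorance of how the threaded worker pool works in the background.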
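The same __get__ binding trick works without Twisted. In this sketch a concurrent.futures thread pool stands in for deferToThread and a Future stands in for the Deferred; run_in_pool and background are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor

_pool = ThreadPoolExecutor(max_workers=4)

def run_in_pool(func, *args, **kwargs):
    """Stand-in for deferToThread: run func on a worker thread, return a Future."""
    return _pool.submit(func, *args, **kwargs)

# run_in_pool.__get__(f) binds f as run_in_pool's first argument (the way a method
# is bound to its instance), so the decorated name ends up calling run_in_pool(f, ...).
background = run_in_pool.__get__

@background
def sync_function():
    return "Hi !"

future = sync_function()
print(future.result())
```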

53: But before I even got to running a server, I ran into my second practical problem. A DNS server has to listen on port 53. I run an instance of pdnsd which combines all the various DNS sources I use and caches them locally. The server I was writing obviously couldn't replace it, but would have to listen on port 53 to work.

127.0.0.0/8: Very soon I discovered that the two servers can both listen on port 53 on the same machine. The whole 127.0.0.0/8 block is reserved for loopback, so every machine has far more than 255 loopback addresses available. On Linux, 127.0.0.2 reaches the same host as localhost, but the different IP means that pdnsd listening on 127.0.0.1:53 does not conflict with a server on 127.0.0.2:53. Having reconfigured the two servers to play nice with each other, I was still left with the really hard problem.
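A quick way to convince yourself: bind two UDP sockets to the same port on two different loopback addresses. This sketch lets the kernel pick a free high port so it runs without root (the real setup uses 53 for both), and it assumes Linux, where the whole 127/8 block answers on lo; some other systems only configure 127.0.0.1 by default.

```python
import socket

def bind_udp(address, port):
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sock.bind((address, port))
    except OSError:
        sock.close()
        raise
    return sock

first = bind_udp("127.0.0.1", 0)       # port 0: kernel picks a free port
port = first.getsockname()[1]
second = bind_udp("127.0.0.2", port)   # same port, different loopback address: fine
print(first.getsockname(), second.getsockname())

clashed = False
try:
    bind_udp("127.0.0.1", port)        # same address and port: refused
except OSError as exc:
    clashed = True
    print("clash:", exc)
```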

RARP: The correct protocol for converting MAC addresses into IP addresses is called RARP. But it is obsolete and most modern OSes do not respond to RARP requests. Another option was to send a broadcast ping inside an Ethernet frame addressed to the wanted MAC address, so that only the target machine receives the packet and responds. Unfortunately, even that does not work with modern Linux machines, which ignore broadcast pings.

ARPing: The only fool-proof way of actually locating a machine is using ARP requests. ARP is required for the subnet to function at all, so it works very well. But an ARP scan is a scatter scan which sends out a request for every IP in the subnet. The real question then was how to do that within the limitations of Python.

import scapy: Let me introduce scapy. Scapy is an unbelievable piece of code which makes it possible to assemble Layer 2 and Layer 3 packets in Python. It is truly a toolkit for the network researcher to generate, analyze and handle packets from all layers of the protocol stack. For example, here's how I build an ICMP packet.

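```python
from scapy.all import Ether, IP, ICMP, srp  # modern scapy import path

eth = Ether(dst='ff:ff:ff:ff:ff:ff')
ip = IP(dst='10.0.0.9/24')  # scapy expands the /24 into one packet per address
icmp = ICMP()
pkt = eth/ip/icmp
(ans, unans) = srp(pkt, timeout=2)  # stop waiting for replies after 2 seconds
```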

The above code very simply sends out an ICMP ping packet to every host on the network (10.0.0.*) and waits for answers. The corresponding C framework code required to do something similar would run into a couple of hundred lines. Scapy is truly amazing.
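The ARP scan from the ARPing step can be written the same way. Here is a hedged sketch: arp_scan broadcasts an ARP who-has for every address in the subnet (it needs root and scapy, imported via the modern scapy.all path), and ip_for_mac picks out the answer for one MAC. Both function names are hypothetical, not taken from dnsmac.py.

```python
def ip_for_mac(pairs, mac):
    """pairs: iterable of (ip, mac) answers; return the IP owning `mac`, or None."""
    wanted = mac.lower()
    for ip, hw in pairs:
        if hw.lower() == wanted:
            return ip
    return None

def arp_scan(subnet, iface, timeout=2):
    """Broadcast ARP who-has for every address in `subnet`; needs root and scapy."""
    from scapy.all import ARP, Ether, srp
    answered, _unanswered = srp(Ether(dst="ff:ff:ff:ff:ff:ff") / ARP(pdst=subnet),
                                iface=iface, timeout=timeout, verbose=0)
    return [(reply[ARP].psrc, reply[ARP].hwsrc) for _sent, reply in answered]

if __name__ == "__main__":
    print(ip_for_mac(arp_scan("10.0.0.0/24", "eth1"), "00:19:d2:ff:ff:ff"))
```

Keeping the matching in a pure function means the lookup logic can be exercised without root or a live network.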

Defer cache: Flooding the network with ARP packets for every DNS request is simply a bad idea. The defer mechanism gives an amazing way to slipstream multiple DNS requests for the same host into the first MAC address lookup. Using a class-based decorator means I can hook in the cache with yet another decorator. The base code for the decorator implementation itself is stolen from the twisted mailing list.
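The mailing-list DeferCache isn't reproduced here. As a hedged illustration of the slipstreaming idea, this hypothetical FutureCache uses concurrent.futures.Future in place of a Deferred: calls with the same arguments within cachefor seconds share one Future, so a burst of DNS queries for the same host triggers a single lookup. Failed lookups are evicted so they aren't cached.

```python
import time
from concurrent.futures import Future

class FutureCache(object):
    """Decorator: share one Future per argument tuple for `cachefor` seconds."""

    def __init__(self, func, cachefor=60, clock=time.monotonic):
        self.func = func
        self.cachefor = cachefor
        self.clock = clock
        self.entries = {}  # args -> (expiry time, Future)

    def __call__(self, *args):
        now = self.clock()
        entry = self.entries.get(args)
        if entry is not None and entry[0] > now:
            return entry[1]  # share the in-flight or recent lookup
        future = self.func(*args)
        self.entries[args] = (now + self.cachefor, future)
        future.add_done_callback(lambda f: self._forget_failure(args, f))
        return future

    def _forget_failure(self, args, future):
        # don't cache errors: drop the entry if this particular lookup failed
        if future.cancelled() or future.exception() is not None:
            entry = self.entries.get(args)
            if entry is not None and entry[1] is future:
                del self.entries[args]
```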

Nested Lambdas: But before the decorator code itself, here's some really hairy python code which allows decorators to have named arguments. Basically using a lambda as a closure, inside another lambda, allowing for some really funky syntax for the decorator (yeah, that's chained too).

```python
cached = lambda **kwargs: lambda *args, **kwarg2: \
    ((kwarg2.update(kwargs)), DeferCache(*args, **(kwarg2)))[1]

@cached(cachefor=420)
@deferred
def lookupMAC(name, mac):
    ...
```

The initial lambda (cached) accepts the **kwargs given (cachefor=420), which are then merged into the keyword arguments of the second lambda and eventually passed, along with the decorated function, to the DeferCache constructor. It is insane, I know - but it is more commonly known as the curry pattern for Python. I've developed a liking for lambdas ever since I started using map/reduce/filter combinations to fake algorithm parallelization.
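For comparison, functools.partial expresses the same decorator factory far more readably. DeferCache below is a hypothetical stub that only records its arguments; the one behavioural difference is precedence, since the lambda lets the factory's keywords win while partial lets call-time keywords win, which never matters when it's used purely as a decorator.

```python
import functools

class DeferCache(object):
    """Hypothetical stand-in for the real DeferCache: it only records its arguments."""
    def __init__(self, func, cachefor=60):
        self.func = func
        self.cachefor = cachefor

    def __call__(self, *args):
        return self.func(*args)

# The lambda version from the post...
cached_lambda = lambda **kwargs: lambda *args, **kwarg2: \
    ((kwarg2.update(kwargs)), DeferCache(*args, **kwarg2))[1]

# ...and the same thing with functools.partial.
def cached(**options):
    """@cached(cachefor=420) turns func into DeferCache(func, cachefor=420)."""
    return functools.partial(DeferCache, **options)

@cached(cachefor=420)
def lookupMAC(name, mac):
    return (name, mac)

@cached_lambda(cachefor=420)
def lookupMAC2(name, mac):
    return (name, mac)
```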

After assembling all the pieces I got the following dnsmac.py. It requires root to run (port 53 is privileged) and a simple configuration file in Python. Fill in the MAC addresses of the hosts which need to be mapped and edit the interface for wired or wireless networks.

```python
hosts = {
    'epsilon': '00:19:d2:ff:ff:ff',
    'sirius' : '00:16:d4:ff:ff:ff',
}

iface = "eth1"
server_address = "127.0.0.2"
ttl = 600
```

But it does not quite work yet. Scapy waits for replies with a select() call which does not handle EINTR errors (select() fails with EINTR when a signal interrupts it, and scapy doesn't retry). The workaround is easy and I've submitted a patch to the upstream dev. With that patch merged into scapy.py and python-ipy, the DNS server finally works based on MAC address. I've probably taken more time to write the script and this blog entry than I'll ever spend looking for the correct IP manually.

But that is hardly the point.
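For reference, the shape of an EINTR workaround is a retry loop around select(). This sketch is not the patch that went upstream; select_fn is a hypothetical injection point so the retry can be exercised, and the full timeout is reused on each retry for simplicity. Since Python 3.5 (PEP 475) select.select retries on EINTR by itself, so this only matters on older interpreters.

```python
import errno
import select

def select_retrying(rlist, wlist, xlist, timeout=None, select_fn=select.select):
    """select() that restarts when a signal interrupts it with EINTR.

    Simplification: the full timeout is reused on every retry."""
    while True:
        try:
            return select_fn(rlist, wlist, xlist, timeout)
        except (OSError, select.error) as exc:
            if exc.args and exc.args[0] == errno.EINTR:
                continue  # interrupted by a signal: just try again
            raise
```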

What's in a name? that which we call a rose

By any other name would smell as sweet;

-- Shakespeare, "Romeo and Juliet"

X11 programming is a b*tch. The little code I've written for dotgnu using libX11 must've damaged my brain more than second-hand smoke and caffeine overdoses put together. So, when someone asked for a quick program to look at an X11 window and report pixel modifications, my immediate response was "Don't do X11". But saying that without offering a solution didn't sound too appealing, so I dug around a bit for other ways to hook into display code.

RFB: Remote Frame Buffer is the client-server protocol for VNC. So, to steal some code, I pulled down pyvnc2swf. But while reading that I had a slight revelation - inserting my own listeners into its event-loop looked nearly trivial. The library is very well written and has very little code in the protocol layer which assumes the original intention (i.e making screencasts). Here's how my code looks.

```python
class VideoStream:

    # Python 2 only: tuple parameter unpacking in the signature
    def paint_frame(self, (images, othertags, cursor_info)):
        ...

    def next_frame(self):
        ...

class VideoInfo:

    def set_defaults(self, w, h):
        ...

converter = RFBStreamConverter(VideoInfo(), VideoStream(), debug=1)
client = RFBNetworkClient("127.0.0.1", 5900, converter)
client.init().auth().start()
client.loop()
```

Listening to X11 updates from a real display is that simple. The updates are periodic and the fps can be set to something sane like 2 or 3. The image data is raw ARGB with region info, which makes it really simple to watch a particular window. The VNC server (like x11vnc) takes care of all the XDamage detection and polling the screen for incremental updates with that - none of that cruft needs to live in your code.
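Because every update arrives as a rectangle plus pixel data, watching one window boils down to a rectangle-intersection test. A hedged sketch: the (x, y, width, height) tuples, the 4-bytes-per-pixel A,R,G,B byte order and the WATCHED geometry are assumptions, so check them against what pyvnc2swf actually hands to paint_frame.

```python
def intersect(a, b):
    """Overlap of two (x, y, w, h) rectangles, or None if they don't overlap."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2 = min(a[0] + a[2], b[0] + b[2])
    y2 = min(a[1] + a[3], b[1] + b[3])
    if x2 <= x1 or y2 <= y1:
        return None
    return (x1, y1, x2 - x1, y2 - y1)

def pixel_at(data, width, x, y):
    """(a, r, g, b) of pixel (x, y) in a raw buffer with 4 bytes per pixel."""
    offset = (y * width + x) * 4
    return tuple(data[offset:offset + 4])

WATCHED = (100, 100, 200, 150)  # hypothetical window geometry

def on_update(rect, data):
    """Report only the part of an update that touches the watched window."""
    overlap = intersect(rect, WATCHED)
    if overlap is not None:
        print("window changed in", overlap)
    return overlap
```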

Take a look at rfbdump.py for the complete implementation - it is hardcoded to work with a localhost vnc server, but it should work across the network just fine.

--You can observe a lot just by watching.

-- Yogi Berra

Finally got around to getting a debug build of libgphoto2. After a couple of hours of debugging, the problem turned out to be one of design rather than a real bug. I had to try a fair bit to trace the original error down to the data structure code. This is code from gphoto2-list.h.

```c
#define MAX_ENTRIES 1024

struct _CameraList {
    int count;
    struct {
        char name  [128];
        char value [128];
    } entry [MAX_ENTRIES];
    int ref_count;
};
```

And in the function gp_list_append(), there is no code to handle a spill past MAX_ENTRIES. As it turns out, I had too many photos on my SD card - in one directory. The assumption that a directory holds at most 1024 photos was proven untrue - for my SD450.

```
Breakpoint 3, file_list_func (fs=0x522a60,
    folder=0x5a3660 "/store_00010001/DCIM/190CANON", list=0x2b11e6c38010,
    data=0x521770, context=0x523d90) at library.c:3933
(gdb) p params->deviceinfo->Model
$2 = "Canon PowerShot SD450"
(gdb) p params->handles
$3 = {n = 1160, Handler = 0x528c90}
```

So the code was dying with a memory error because it overran the 1024 slots in the folder listing code. When I explained my problem on the #gphoto channel, _Marcus_ immediately told me that I could probably rebuild gphoto2 after changing MAX_ENTRIES to 2048 - I had already tried that and failed. As it turns out, MAX_ENTRIES is defined in two places, and even otherwise, the libraries which use gphoto2 allocate CameraList on the stack in various places with a struct CameraList list;. Changing MAX_ENTRIES changes the size of the struct, so every caller compiled against the old header breaks, which introduces a large number of binary compatibility issues. But after I rebuilt libgphoto2 and gphoto2, I was able to successfully download all my photos onto my disk using the command line client, though in the process I completely b0rked gthumb.
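Before plugging a card in, it's easy to check whether any folder would blow past that fixed 1024-entry listing. A small sketch, assuming the card is mounted as a normal filesystem; the mount point in the usage line is hypothetical.

```python
import os

MAX_ENTRIES = 1024  # fixed CameraList capacity from gphoto2-list.h

def crowded_folders(root, limit=MAX_ENTRIES):
    """Yield (folder, file count) for every folder holding more than `limit` files."""
    for folder, _dirs, files in os.walk(root):
        if len(files) > limit:
            yield folder, len(files)

if __name__ == "__main__":
    for folder, count in crowded_folders("/media/card/DCIM"):  # hypothetical mount point
        print("%s: %d files (limit %d)" % (folder, count, MAX_ENTRIES))
```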

And you've definitely gotta love the gphoto2 devs - look at this check-in about 15 minutes after my bug report.

--The capacity to learn is a gift;

The ability to learn is a skill;

The willingness to learn is a choice.

-- Swordmasters of Ginaz

I've been playing around with twisted for a while. It is an excellent framework for writing protocol servers in Python. I was mostly interested in writing a homebrew DNS server with the framework, something which could run plugin modules to add features like statistical analysis of common typos in domain names, and eventually something which would fix typos, like OpenDNS does.

To my surprise, twisted already came with a DNS server - twisted.names. And apparently, this was feature compatible with what I wanted to do - except that there was a distinct lack of documentation to go with it.

7 hours and a few coffees later, I had myself a decent solution. Shouldn't have taken that long, really - but I was lost in all that dynamically typed polymorphism.

```python
from twisted.internet.protocol import Factory, Protocol
from twisted.internet import reactor
from twisted.names import client, dns, server  # older Twisted: from twisted.protocols import dns

class SpelDnsResolver(client.Resolver):

    def filterAnswers(self, message):
        if message.trunc:
            # truncated UDP answer: repeat the queries over TCP
            return self.queryTCP(message.queries).addCallback(self.filterAnswers)
        else:
            if len(message.answers) == 0:
                query = message.queries[0]
                # code to do a dns rewrite
                return self.queryUDP(<alternative>).addCallback(self.filterAnswers)
            return (message.answers, message.authority, message.additional)

verbosity = 0
resolver = SpelDnsResolver(servers=[('4.2.2.2', 53)])
f = server.DNSServerFactory(clients=[resolver], verbose=verbosity)
p = dns.DNSDatagramProtocol(f)
f.noisy = p.noisy = verbosity

reactor.listenUDP(53, p)
reactor.listenTCP(53, f)
reactor.run()
```

That's the entire code (well, excluding the rewrite sections). Should I even bother to explain how it works? It turned out to be so childishly simple that I feel beaten to the punch by the twisted framework. Run it as root (port 53 is privileged) and you have your own DNS server! To start it with twistd -y speldns.py instead, the file has to define an application object for twistd to pick up, rather than calling reactor.run() itself.

In conclusion, I hope I have grossed a few of you out by trying to do soundex checks on dns sub-domains.
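For the morbidly curious, the soundex part is short. This is classic American Soundex (first letter kept, the remaining consonants coded into digits, h and w not separating equal codes, padded to four characters), plus a hypothetical suggest() helper showing how a rewrite step might match a mistyped sub-domain label against known ones. Non-letters such as digits and hyphens are simply dropped.

```python
# Letter groups of classic American Soundex; vowels, h, w and y get no digit.
CODES = {}
for letters, digit in (("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
                       ("l", "4"), ("mn", "5"), ("r", "6")):
    for ch in letters:
        CODES[ch] = digit

def soundex(word):
    """Four-character Soundex key, e.g. 'Robert' -> 'R163'."""
    letters = [ch for ch in word.lower() if ch.isalpha()]
    if not letters:
        return ""
    result = [letters[0].upper()]
    last = CODES.get(letters[0], "")
    for ch in letters[1:]:
        code = CODES.get(ch, "")
        if code and code != last:
            result.append(code)
        # h and w don't separate letters with equal codes; vowels do
        if ch not in "hw":
            last = code
    return ("".join(result) + "000")[:4]

def suggest(label, known_labels):
    """Hypothetical typo fixer: known labels whose Soundex key matches `label`."""
    key = soundex(label)
    return [k for k in known_labels if k != label and soundex(k) == key]
```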

--DNS is not a directory service.

-- Paul Vixie

For the entire weekend and a bit of Monday, I've been tweaking my di-graphs to represent flickr entries and in general, that has produced some amazing results. For example, I have 700+ people in a Contact of a Contact relationship and nearly 13,000 people in the next level of connectivity. But in particular, I was analyzing for cliques in the graph - a completely connected subgraph in which every node is connected to every other. For example, me, spo0nman, premshree and teemus was the first clique the system identified (*duh!*).
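NetworkX ships clique routines of its own (find_cliques, for one). To show what a clique search actually does, here is a plain-Python Bron-Kerbosch with pivoting that lists all maximal cliques. It treats the contact graph as undirected, so a directed Flickr contact list would first need its edges symmetrised, which undirected() does; the edge list in the test is illustrative.

```python
def undirected(edges):
    """Adjacency sets for an undirected graph built from (a, b) pairs."""
    adj = {}
    for a, b in edges:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    return adj

def maximal_cliques(adj):
    """Bron-Kerbosch with pivoting: every maximal clique as a set of nodes."""
    cliques = []

    def expand(r, p, x):
        if not p and not x:
            cliques.append(r)
            return
        # branch only on nodes not adjacent to a well-connected pivot
        pivot = max(p | x, key=lambda u: len(adj[u] & p))
        for v in list(p - adj[pivot]):
            expand(r | {v}, p & adj[v], x & adj[v])
            p = p - {v}
            x = x | {v}

    expand(set(), set(adj), set())
    return cliques
```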

Initially, I had dug out my trusty Sedgewick to look up graph algorithms and quickly lost myself in boost::graph land. STL is not something I enjoy doing - this was becoming more about my missing const somewhere than about real algorithms. And then I ran into NetworkX in Python.

NetworkX is an amazing library - very efficient and very well written. The library uses raw Python structures as input and output and is not wrapped over with classes or anything else like that. The real reasons for this came up as I started using Python properly rather than rewriting my C++ code in Python syntax. When I was done, I was far more impressed with the language than with the library itself. Here are a few snippets of code which any C programmer should read twice :)

```python
def fill_nodes(graph, id, contacts):
    nodes = [v.id for v in contacts]
    edges = [(id, v.id) for v in contacts]
    graph.add_nodes_from(nodes)
    graph.add_edges_from(edges)

def color_node(graph):
    # cmap is a module-level list of colours; Python lists use len(), not .length
    node_colours = list(map(
        lambda x: cmap[graph.degree(x) % len(cmap)],
        graph.nodes()))
    return node_colours
```

Or one of the more difficult operations, deleting unwanted nodes from a graph.

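```python
# trim stray nodes
def one_way_ride(graph):
    # list() fixes the selections before the graph is modified
    deleted_nodes = list(filter(
        lambda x: graph.degree(x) == 1,
        graph.nodes()))
    deleted_edges = list(filter(
        lambda x: graph.degree(x[0]) == 1 or
                  graph.degree(x[1]) == 1,
        graph.edges()))
    # newer NetworkX calls these remove_*_from (older releases: delete_*_from)
    graph.remove_edges_from(deleted_edges)
    graph.remove_nodes_from(deleted_nodes)
```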

The sheer fluidity of the lambda forms is amazing, and I'm getting the hang of this style of thinking. And because I was doing it in Python, it was relatively easy to create a cache for the web-service requests with cPickle. Anyway, after fetching all the data and doing all this computation, I managed to lay out the graph and represent it interactively, in the process forgetting about clique analysis, but that's a whole different blog entry anyway.
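A pickle-backed cache for web-service calls can be as small as a decorator. This is a hedged sketch, not the original cache: pickle_cached is a hypothetical name, results are keyed on the positional arguments, and the whole cache is rewritten on every miss, which is fine for a few thousand entries. Only unpickle files you created yourself.

```python
import functools
import os
import pickle

def pickle_cached(path):
    """Decorator: memoise a function's results in a pickle file at `path`."""
    def decorate(func):
        cache = {}
        if os.path.exists(path):
            with open(path, "rb") as fh:
                cache.update(pickle.load(fh))

        @functools.wraps(func)
        def wrapper(*args):
            if args not in cache:
                cache[args] = func(*args)
                with open(path, "wb") as fh:
                    pickle.dump(cache, fh)
            return cache[args]
        return wrapper
    return decorate
```

Usage would be something like decorating a hypothetical get_contacts(user_id) with @pickle_cached("ws.pickle"), so a rerun of the graph-building script skips every request it has already made.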

--The worst cliques are those which consist of one man.

-- G. B. Shaw

I've been playing around with some stuff over the weekend which, even though it runs in a browser, needs continuous updates of data while maintaining state. So I tried a socket-hungry version of server push which has been called COMET. This technique came to my notice because of a hack which bluesmoon did, except I had to reinvent for Python what CGI.pm did for bluesmoon by default.

But first, I changed a couple of the client-side bits. Instead of relying on XHR requests, which are all bound & gagged by the security model, I switched to a simpler cross-domain IFRAME. But what's really cool here is that I use a single request to push all my data through, in stages, maintaining state. So here's a bit of code, with the technical monstrosity hidden away.

```python
#!/usr/bin/python
import sys, time
from comet import MixedReplaceResponse

content = "<html><body><h1>Entry %d</h1></body></html>"
req = MixedReplaceResponse()  # plain Python: no "new" keyword
for i in range(0, 10):
    req.write(content % i)
    req.flush()
    time.sleep(3)
req.close()
```

The MixedReplaceResponse is a small python class which you can download - comet.py. But the true beauty of this comes into picture only when you put some scripting code in what you send. For example here's a snippet from my iframe cgi code.

```python
import json

wrapper = """
<html><body> <script> if(window.parent) {
%s
} </script></body></html>
"""

# json.dumps from the stdlib; older json-py spelled this json.write
script = ("window.parent.updateView(%s);" % json.dumps(data))
req.write(wrapper % script)
req.flush()
```

As you can clearly see, this is only a minor modification of the json requests which I'd been playing with. But underneath the hood, on the server side, this is a totally different beast, totally socket hungry and does not scale in the apache cgi model. Interesting experiment nonetheless.
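The comet.py download isn't reproduced here, so this is only a guess at the core of such a class: a CGI response declared as multipart/x-mixed-replace, where each part written replaces the previous one in browsers that honour that content type. The boundary string and the injectable `out` stream are assumptions, and the real comet.py may well differ.

```python
import sys

class MixedReplaceResponse(object):
    """Hypothetical sketch of a CGI multipart/x-mixed-replace writer."""

    def __init__(self, out=None, boundary="cometboundary"):
        self.out = out if out is not None else sys.stdout
        self.boundary = boundary
        # CGI header block: the blank line ends the headers
        self.out.write("Content-Type: multipart/x-mixed-replace;boundary=%s\r\n\r\n"
                       % boundary)

    def write(self, html):
        # each part starts with a delimiter line and its own headers
        self.out.write("--%s\r\n" % self.boundary)
        self.out.write("Content-Type: text/html\r\n\r\n")
        self.out.write(html + "\r\n")

    def flush(self):
        self.out.flush()

    def close(self):
        # the closing delimiter ends the multipart stream
        self.out.write("--%s--\r\n" % self.boundary)
        self.flush()
```

Each write() holds the CGI process and its socket open until close(), which is exactly why this model is so socket hungry under Apache.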

Now, if only I could actually host a cgi somewhere ...

--To see a need and wait to be asked, is to already refuse.

I was battling with some code that used instanceof() in Python. I don't know where that's a built-in (I suspect Jython), but it doesn't work on my Python 2.4.3, where the built-in is isinstance(). While I was wondering about this problem, I tried something outlandish and it worked. But before I actually explain what I did, take a closer look at this module (x.py).

```python
def identity(h):
    return h

def coolfunc(p):
    return identity(p)
```

That was pretty standard code, right ? Now, to use/abuse this module in y.py.

```python
import x

print(x.coolfunc("Hello World !"))
x.identity = lambda x : 42
print(x.coolfunc("What is 21 + 21 ?"))
```

As you can probably guess, I can override (uhh.., pollute) x.identity with my own functions, which opens up a solution to my original problem. Pretty simple, now that I think of it.

```python
othermodule.instanceof = lambda x,y : type(x) == y
```
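The reason this works: coolfunc looks up the name identity in its module's globals every time it runs, so rebinding the module attribute changes what it calls (code that did from x import identity keeps its own reference and is unaffected). This self-contained sketch builds the x.py module from the post in memory with types.ModuleType purely so it runs as one file.

```python
import types

# Recreate x.py in memory so the example needs no second file.
x = types.ModuleType("x")
exec(
    "def identity(h):\n"
    "    return h\n"
    "\n"
    "def coolfunc(p):\n"
    "    return identity(p)\n",
    x.__dict__,
)

before = x.coolfunc("Hello World !")
# coolfunc resolves `identity` in x's globals at call time,
# so rebinding the module attribute changes what it calls.
x.identity = lambda value: 42
after = x.coolfunc("What is 21 + 21 ?")
print(before, after)
```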

I hope this has made your friday a little bit more surreal :)

--Talent to endure stems from the ignorance of alternatives.

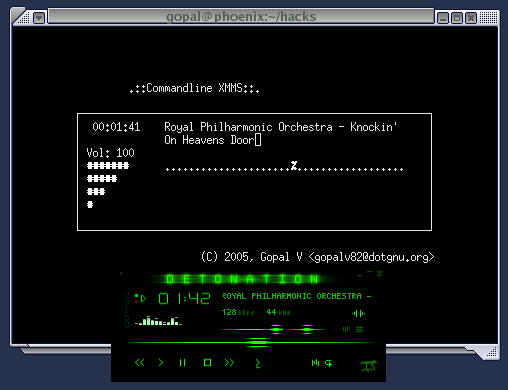

I usually work on the command line. 80x25 text consoles are a lot easier to read than X terminals with fonts. On my old box, I used mpg321 to play music on the Alt+F6 terminal. mpg321 sucks totally here - but xmms works well, except for an "application xmms uses obsolete OSS audio interface" warning from the kernel. Also, these days a lot of what I have are .ogg files. I don't really like mplayer - mainly because it doesn't let me enqueue songs like xmms does. I could use xmms easily if I didn't have to switch back to X to skip a song or reduce the volume.

Necessity is the mother of invention, but I didn't have to invent an entire media player here. I had the xmms-remote code in my distfiles directory and I started poking in there. Before long, I discovered that I had Python bindings installed as well. The API is amazingly easy to use. I wrote the xmms code in about 10 minutes - doing curses without any documentation took some time (thank $deity for test_curses.py).

You can get my original 100-odd lines of cxmms.py. It is pretty much self-explanatory, except for the gratuitous use of lambda. Also it is a good example of how to wait for user input while other screen updates are required. And I want to use the j search shortcut - any volunteers to hack that into the current code ?
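The wait-for-input-while-updating trick is mostly one curses call: window.timeout() makes getch() give up after a delay and return -1, so the loop can redraw the status line even when no key is pressed. A hedged sketch; the player object and its next/prev/volume/title methods are hypothetical stand-ins, not the xmms bindings' API.

```python
import curses

def handle_key(key, player):
    """Map a key press to a player action; returns False when it's time to quit."""
    actions = {
        ord("n"): player.next,
        ord("p"): player.prev,
        ord("+"): lambda: player.volume(+5),
        ord("-"): lambda: player.volume(-5),
    }
    if key == ord("q"):
        return False
    action = actions.get(key)
    if action is not None:
        action()
    return True

def main(stdscr, player):
    stdscr.timeout(500)  # getch() returns -1 after 500 ms without a key
    while True:
        stdscr.erase()
        stdscr.addstr(0, 0, "Now playing: %s" % player.title())
        stdscr.refresh()
        key = stdscr.getch()
        if key != -1 and not handle_key(key, player):
            break

# Run with curses.wrapper(main, player), given a real (hypothetical) player object.
```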

It was now 4 AM Saturday morning. Sleep is slowly becoming an optional requirement for me. I went back to bed and picked up the book to continue reading, and the next page had these lines in it.

He was in the company of ... another man who would stay up all night in order to invent an alarm clock to wake him up in the morning.

I got distracted by this while I was hacking away in jit-interp.c trying to fix stack allocation ordering. Reminds me of the last chapter of So Long and Thanks for all the Fish.

Maybe I should trade in this life and ask for a refund ?

--There was a point to this story, but it has temporarily escaped the chronicler's mind.

-- Douglas Adams